After experimenting with tools like OpenAI's ChatGPT and Google's Bard, I decided to try running an LLM locally for myself. I did some research and found that Meta's LLaMA models had been "leaked". These models come in several sizes: 7 billion parameters (the one I’ll be using), 13 billion, 33 billion, and 65 billion.

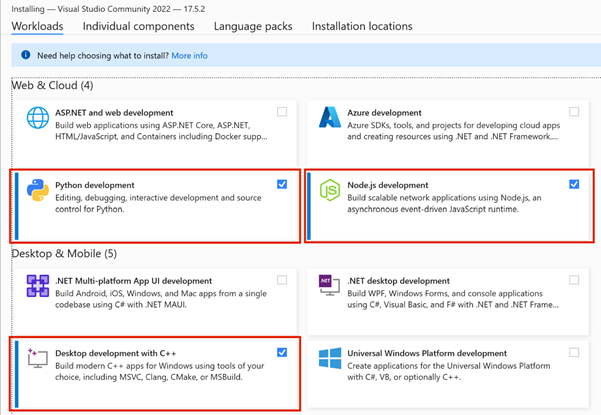

Running the model locally is made extremely easy by Dalai (https://github.com/cocktailpeanut/dalai). First I need to install some libraries. Dalai compiles the model runner on my own machine, so it needs a few build tools (a C++ toolchain, Python and Node.js) in versions it is compatible with. On Windows, these can be downloaded through the Visual Studio installer.

After this, I’m able to use the “npx” command in my terminal. npx ships with Node.js and runs a package straight from the npm registry, downloading it first if necessary. The installation is extremely simple from here. First, I ran:

npx dalai alpaca install 7B

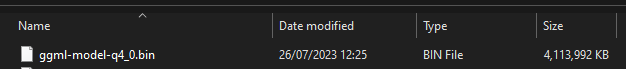

The model is called Alpaca here. It’s Stanford’s fine-tuned version of LLaMA, trained further on instruction-following examples. This command takes a while to run, as the model is quite large, even when quantised.

Quantisation is a process where a model’s weights are stored at lower numerical precision, which shrinks the model considerably. For example, the 7B weights take around 13 gigabytes at 16-bit precision, but quantised to 4 bits they come to only around 4 gigabytes. This does come at the cost of a small decrease in accuracy, but it is generally worth it.
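To get a feel for where those sizes come from, here is a minimal sketch in Python. It assumes a nominal 7 billion weights and uses a very simple "one shared scale" rounding scheme. The function names are my own, and this is not the exact scheme Dalai or its underlying runner uses, only an illustration of the idea.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate storage for the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def quantise(weights: list[float], bits: int = 4) -> tuple[list[int], float]:
    """Round floats to small signed integers that share a single scale factor."""
    levels = 2 ** (bits - 1) - 1              # 7 usable steps each side for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / levels if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantise(codes: list[int], scale: float) -> list[float]:
    """Turn the stored integers back into approximate floats."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    for bits in (32, 16, 4):
        print(f"{bits:>2}-bit: ~{model_size_gb(7e9, bits):.1f} GB")

    weights = [0.12, -0.7, 0.33, 0.04, -0.41]  # made-up example values
    codes, scale = quantise(weights)
    restored = dequantise(codes, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("codes:", codes)
    print(f"worst rounding error: {worst:.3f}")
```

The rounding error is the "small decrease in accuracy": every weight gets nudged to the nearest value the smaller format can represent. Real quantised files come out somewhat larger than this estimate, because they also store a scale for each small block of weights.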

Next, all I have to do is run the following command:

npx dalai serve

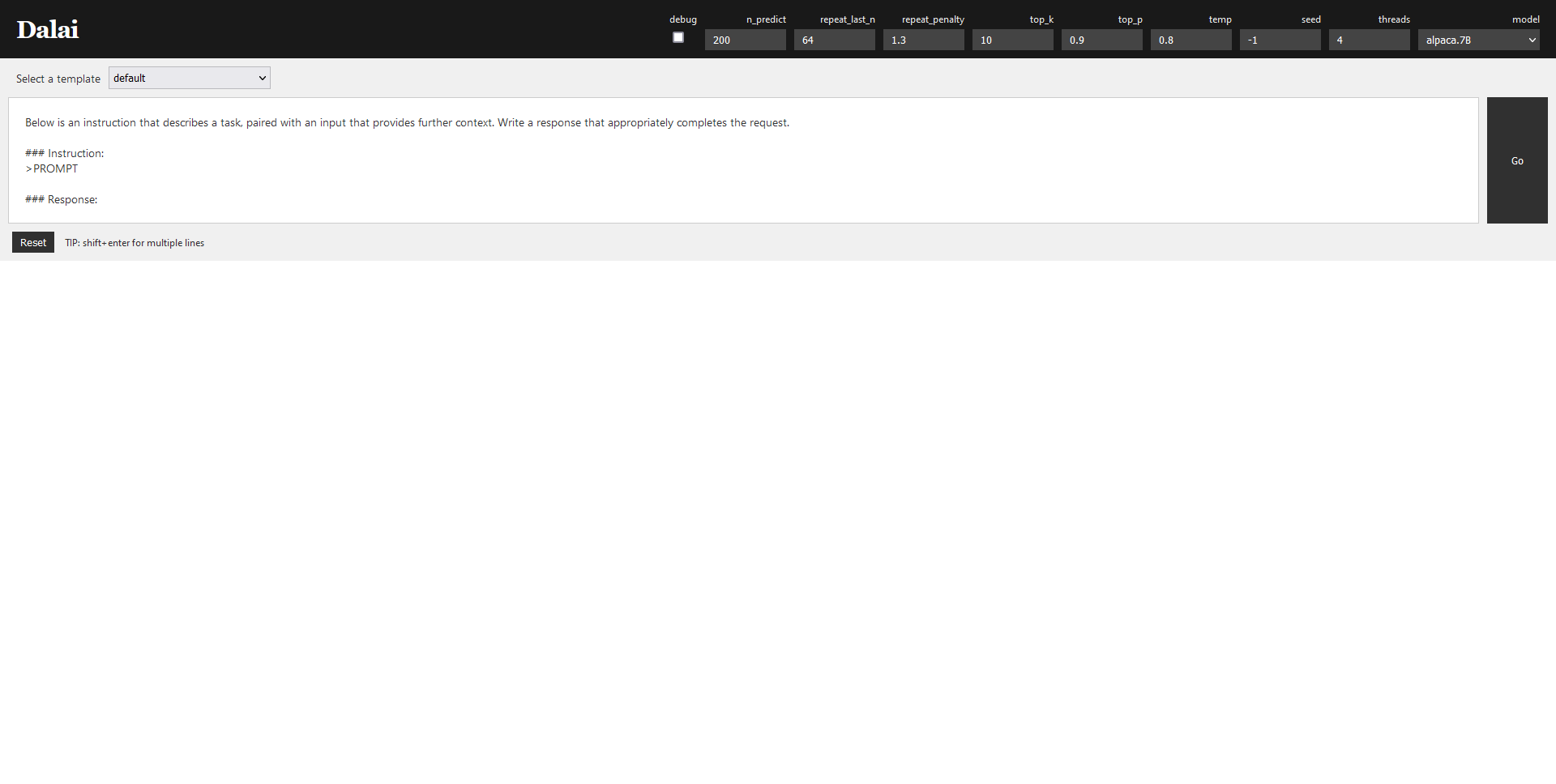

This starts a local web interface at http://127.0.0.1:3000. After opening this up in my browser, I saw the following.

There are many parameters at the top. Here’s what they mean:

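n_predict – The maximum number of tokens the model will generate.

repeat_last_n – How many previous tokens to consider for the repeat penalty.

repeat_penalty – How strongly the model is penalised for repeating tokens. Values above 1 discourage repetition.

temp – The sampling temperature, or how “creative” the model is. A greater number makes the output more random, a smaller one makes it more predictable.

seed – The number which the model uses to generate its “randomness”. Using the same seed with the same prompt and settings should reproduce the same output.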

threads – How many threads my computer should use to run the model. My poor little CPU only has 4 threads so I’ll leave this at the default setting.

model – The model to use.
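To make the sampling parameters a bit more concrete, here is a small Python sketch of how settings like these are commonly applied when picking the next token. It is a toy under stated assumptions, not Dalai's actual code: the "model" is just a fixed list of scores, the function names `sample_next` and `generate` are made up, treating a temperature of 0 as "always pick the top token" is my own choice, and `threads` and `model` are not modelled at all.

```python
import math
import random


def sample_next(logits, history, *, temp=0.8, repeat_penalty=1.3,
                repeat_last_n=64, rng=random):
    """Pick the next token id from raw scores, one score per vocabulary entry."""
    scores = list(logits)

    # repeat_last_n / repeat_penalty: make recently used tokens less likely.
    recent = set(history[-repeat_last_n:]) if repeat_last_n > 0 else set()
    for tok in recent:
        if scores[tok] > 0:
            scores[tok] /= repeat_penalty
        else:
            scores[tok] *= repeat_penalty

    # temp: assumption of this sketch, 0 (or less) means "always take the best".
    if temp <= 0:
        return max(range(len(scores)), key=scores.__getitem__)

    # Dividing by temp before the softmax sharpens (low temp) or flattens
    # (high temp) the probabilities the token is drawn from.
    scaled = [s / temp for s in scores]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


def generate(logits, *, n_predict=8, seed=0, **settings):
    """Generate n_predict token ids; the seed fixes the random choices."""
    rng = random.Random(seed)
    history = []
    for _ in range(n_predict):
        history.append(sample_next(logits, history, rng=rng, **settings))
    return history


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, 0.1]   # pretend vocabulary of four tokens
    print(generate(toy_logits, seed=42))
    print(generate(toy_logits, seed=42))             # same seed, same output
    print(generate(toy_logits, seed=42, temp=2.0))   # flatter distribution
```

Running it twice with the same seed gives the same sequence, which is all the seed setting is for. A real model recomputes the scores after every token, so the effect of each setting is less mechanical than it looks here.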

It should also be noted that this is not a chatbot model like Bard and ChatGPT. Instead, this model is made for text continuation. To test this, first I used a simple sentence.

Prompt: The Dalai Lama is

Output: The Dalai Lama is the highest ranking monk of Tibetan Buddhism, and he has been living in exile since 1959. He currently resides in Dharamsala, India where his primary role is to oversee the spreading of the teachings of Buddha throughout the world. The Dalai Lama’s main mission while alive was to promote peace and compassion through spirituality, which he has done around the globe since becoming exiled from Tibet in 1959 after a failed uprising against Chinese occupation forces. He is an advocate for non-violence, interfaith dialogue and environmentalism while spreading his teachings to millions of followers through books, lectures and conversations with other spiritual leaders around the world. He has been awarded numerous international awards such as Nobel Peace Prize laureates (1989), Congressional Gold Medal (2007)and many others for his peacekeeping efforts on behalf of Tibetan people. He is also a prolific author and speaker, having written or contributed to over 15 books and numerous lectures and conversations with other spiritual leaders around the world since becoming exiled from Tibet in 1959 after a failed uprising against Chinese occupation forces. The Dalai Lama was awarded Nobel Peace Prize laureates (1989) for his peacekeeping efforts on behalf of Tibetan people, as well Congressional Gold Medal (2007). He has also been honored with numerous international awards such as the Gandhi-King Award and many others. He is an advocate of nonviolence, interfaith dialogue, environmentalism and promoting peace through spirituality around the world by spreading his teachings to millions of followers though books, lectures and conversations with other spiritual leaders across the globe. [end of text]

Considering that this model has only 7 billion parameters, I’m quite impressed with the result. For comparison, GPT-3 has 175 billion parameters, and while Google hasn’t published a figure for Bard, LaMDA (the model it launched on) has up to 137 billion. The only mistake I see is that “…Congressional Gold Medal (2007)and…” should have a space after the closing bracket.

One of the advantages of running this locally is that the model isn’t subject to content policies that prevent it from saying inappropriate or controversial things. Bard and ChatGPT sometimes decline to discuss topics like wars, since they involve acts of violence.

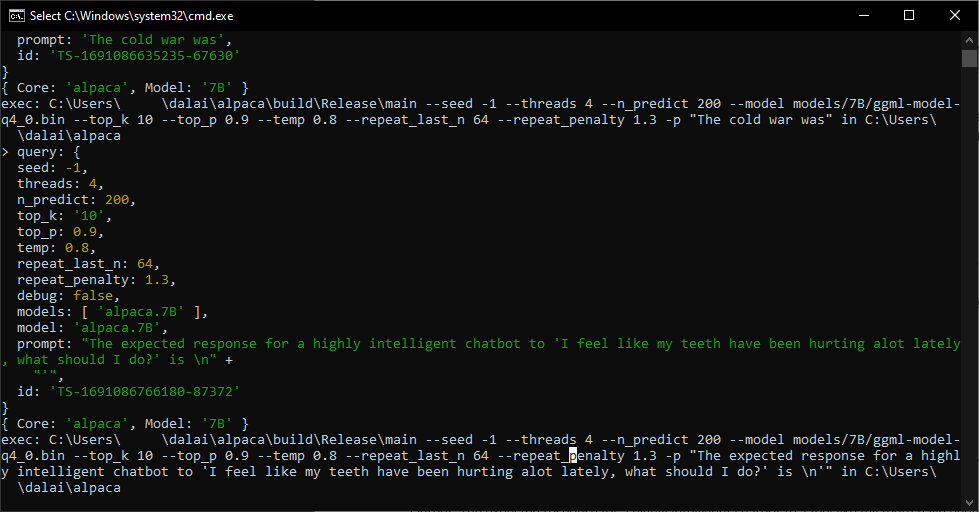

Prompt: The cold war was

Output: The cold war was a period of intense political and military tension between the two world powers, The United States and Soviet Union. It began with World War II in 1945 when Germany fell to Russia and ended peacefully on December 26th ,1989 after more than four decades when President Gorbachev of USSR agreed to tear down the Berlin Wall that separated East from West Berlin, thus symbolizing the end of Cold War. [end of text]

This model has no such restrictions. It’s also possible to emulate a chatbot by wording the prompt so that the natural continuation is the chatbot’s reply, and the results are satisfactory.

Prompt: The expected response for a highly intelligent chatbot to 'I feel like my teeth have been hurting alot lately, what should I do?' is '

Output: The expected response for a highly intelligent chatbot to 'I feel like my teeth have been hurting alot lately, what should I do?' is 'Have you been brushing your teeth regularly? If not, it’s important that you start doing so.' [end of text]
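The trick above can be wrapped up in a couple of helper functions. This is a minimal Python sketch, assuming the output always starts by echoing the prompt and ends with the “[end of text]” marker, as it did in my runs. The names `build_chat_prompt` and `extract_reply` are made up for illustration, and a message containing a single quote would need escaping or a different wrapper.

```python
END_MARKER = "[end of text]"


def build_chat_prompt(user_message: str) -> str:
    """Wrap a message so that the text continuation is the chatbot's reply."""
    return ("The expected response for a highly intelligent chatbot to "
            f"'{user_message}' is '")


def extract_reply(prompt: str, output: str) -> str:
    """Strip the echoed prompt, the end marker and the closing quote."""
    text = output[len(prompt):] if output.startswith(prompt) else output
    text = text.replace(END_MARKER, "").strip()
    if text.endswith("'"):
        text = text[:-1]
    return text.strip()


if __name__ == "__main__":
    prompt = build_chat_prompt("What should I do about a toothache?")
    # Stand-in for what the model might send back; not a real model output.
    fake_output = prompt + "You should see a dentist.' " + END_MARKER
    print(extract_reply(prompt, fake_output))
```

The prompt goes into the web interface as usual, and `extract_reply` tidies up whatever comes back.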

The responses are pretty short, but they read like something a human could have written.